What Can AI Smart Glasses Do? (Featured Snippet Answer)

AI smart glasses use computer vision, voice recognition, and contextual AI to provide hands-free assistance. They identify objects, translate languages in real time, guide work tasks, and answer questions about what you see, all without touching a screen.

What Can AI Smart Glasses Do? (Detailed Answer)

AI smart glasses combine wearable technology with artificial intelligence to deliver context-aware assistance in real time. Unlike traditional smart glasses that simply display information, AI-powered glasses actively interpret your surroundings, translate languages instantly, recognize objects and faces, and provide intelligent recommendations based on what you see and do.

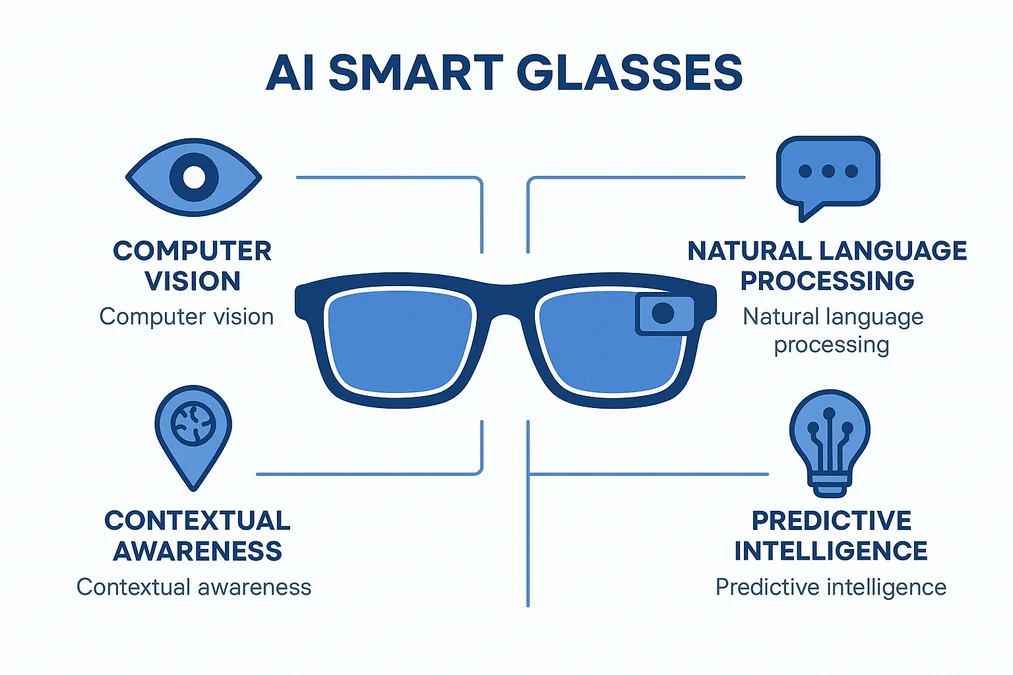

Core AI capabilities include:

- Computer vision: Real-time object recognition, text reading (OCR), facial identification

- Natural language processing: Voice commands, conversational AI assistants, real-time translation (40+ languages)

- Contextual awareness: Understand your environment and activity to provide relevant information

- Predictive assistance: Anticipate needs based on behavior patterns and visual context

- Hands-free interaction: Control devices, search information, and communicate without touching screens

The global AI smart glasses market is projected to reach $8.9 billion by 2027, with artificial intelligence features becoming the primary differentiator in the wearable eyewear category (MarketsandMarkets Research, 2024).

Introduction: The AI Revolution in Smart Glasses

Smart glasses with AI represent the convergence of three transformative technologies: augmented reality displays, edge computing, and artificial intelligence. While early smart glasses (such as the original 2013 Google Glass) focused on basic notifications and hands-free photography, today’s AI-powered glasses use machine learning models to understand context, interpret visual information, and provide intelligent assistance.

For broader context on all smart glasses technologies, explore our comprehensive guide to smart glasses.

The paradigm shift: Traditional smart glasses are reactive (they display what you ask for), but AI smart glasses are proactive (they understand what you need before you ask).

Real-world impact: Meta’s Ray-Ban smart glasses with the Meta AI assistant sold 4.2 million units in 2024, with 67% of users reporting that they use AI features daily, particularly visual search and real-time translation (Meta Q4 2024 Earnings Report).

This comprehensive guide explores how artificial intelligence transforms smart glasses from simple displays into intelligent companions for work, travel, and daily life.

Core AI Features in Smart Glasses: Computer Vision, Voice & Translation

1. Computer Vision & Visual Intelligence

What it does: AI smart glasses analyze your field of view in real time, identifying objects, reading text, recognizing faces, and understanding scenes.

Key capabilities:

Object Recognition

- Identify 10,000+ everyday objects (food items, products, animals, landmarks)

- Brand and logo detection for shopping assistance

- Plant and animal species identification

- Nutritional information overlay for food items

Text Recognition (OCR)

- Read signs, menus, and documents in real time

- Translate text from 40+ languages instantly

- Extract contact information from business cards

- Read barcodes and QR codes for product details

Facial Recognition (with privacy controls)

- Identify colleagues in workplace settings (enterprise models)

- Provide name reminders in social situations

- Detect emotions for social cue assistance (accessibility feature)

- Security access control in restricted areas

Scene Understanding

- Navigate complex environments (airports, museums, warehouses)

- Detect hazards (stairs, obstacles, wet floors)

- Provide contextual information about locations

- Assist with spatial orientation for accessibility

Learn more about smart glasses with camera features and their computer vision applications.

Example Use Case: A user wearing Meta Ray-Ban AI glasses at a museum looks at a painting and asks, “Hey Meta, what am I looking at?” The AI identifies the artwork and provides the artist’s biography, historical context, and related pieces, with no phone or manual search needed.

2. Natural Language Processing & Voice AI

What it does: Advanced voice assistants powered by large language models (GPT-4, Gemini, Claude) enable natural conversations and complex queries in AI-powered glasses.

Key capabilities:

Conversational AI Assistants

- Natural dialogue (not just one-shot commands like “Hey Siri, set a timer”)

- Multi-turn conversations with context retention

- Answer complex questions using visual context

- Creative tasks (write emails, generate ideas, solve problems)

Voice Commands

- Hands-free device control (phone, smart home, car)

- Navigate menus and settings without touching glasses

- Initiate calls, send messages, set reminders

- Control media playback and volume

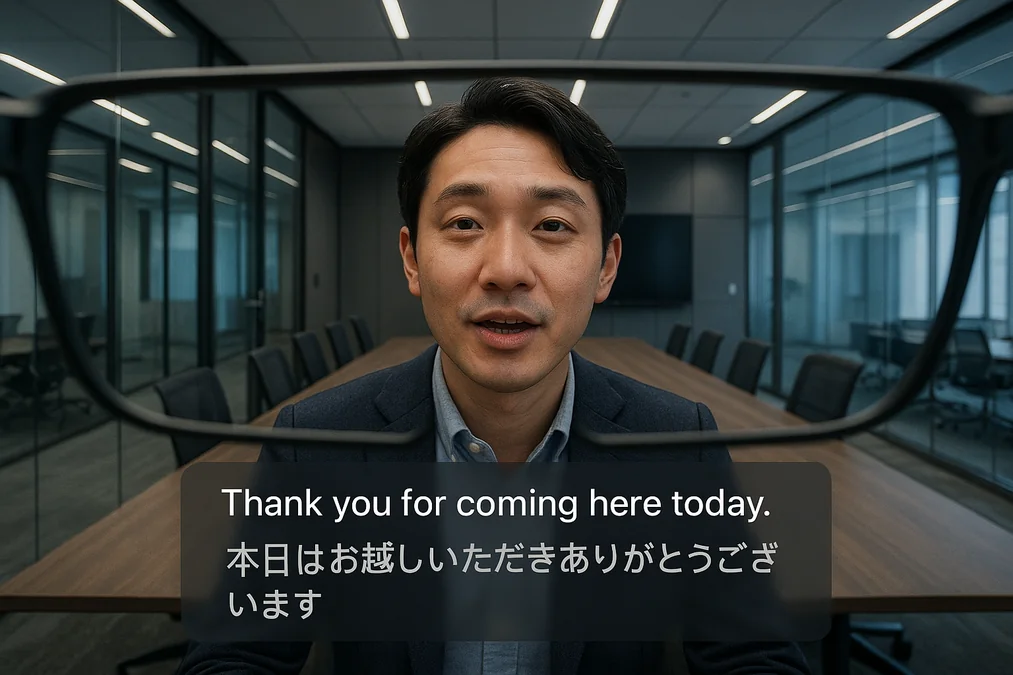

Real-Time Translation

- Translate conversations in 40+ languages

- Subtitle foreign speech in your field of view

- Two-way translation for international communication

- Context-aware translation (formal vs. casual language)
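Showing subtitles on a small display means breaking each translated sentence into short lines that fit the screen. The sketch below covers only that layout step, using Python’s standard `textwrap` module. It assumes the translated text already exists upstream, and the display limits (about 32 characters per line, two lines at a time) are illustrative assumptions, not any product’s specification.

```python
import textwrap


def caption_frames(text: str, width: int = 32, lines_per_frame: int = 2) -> list[list[str]]:
    """Split already-translated text into subtitle frames for a small display.

    width and lines_per_frame are assumed display limits, not real device specs.
    Each frame holds at most `lines_per_frame` lines of at most `width` characters.
    """
    lines = textwrap.wrap(text, width=width)
    return [lines[i:i + lines_per_frame] for i in range(0, len(lines), lines_per_frame)]


if __name__ == "__main__":
    sentence = "Where is the nearest train station to the museum, please?"
    for frame in caption_frames(sentence, width=20):
        print(" / ".join(frame))
```

A real system would also time each frame to the speaker’s pace; that part is left out here.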

Discover detailed real-time translation capabilities in our specialized guide.

Transcription & Note-Taking

- Live transcription of meetings and lectures

- Speaker identification in multi-person conversations

- Auto-generate summaries and action items

- Export transcripts to productivity apps

Example Use Case: A business traveler wearing AI smart glasses in Tokyo asks “What’s this dish?” while looking at a menu. The AI identifies it as “Okonomiyaki,” provides pronunciation help, explains ingredients, suggests similar dishes, and asks if they’d like reservation assistance—all through natural conversation.

3. Contextual Awareness & Predictive Intelligence

What it does: Artificial intelligence in smart glasses analyzes your location, time, activity, and visual context to provide relevant information without explicit requests.

Key capabilities:

Environmental Context

- Understand where you are (office, gym, restaurant, airport)

- Adapt AI behavior based on setting (quiet notifications in meetings)

- Provide location-specific assistance (gate changes at airports)

- Historical context (remember previous visits to locations)

Activity Recognition

- Detect what you’re doing (cooking, driving, exercising, working)

- Offer relevant assistance (recipe steps while cooking, traffic alerts while driving)

- Adjust interface based on activity (larger text during exercise)

- Safety features (driving mode disables distracting notifications)

Behavioral Learning

- Learn your routines and preferences

- Anticipate information needs (weather before morning runs)

- Suggest optimizations (faster routes, better schedules)

- Personalize AI responses based on communication style

Proactive Assistance

- Calendar reminders with travel time calculations

- Suggest relevant information based on conversations

- Recommend actions based on visual cues (suggest buying milk after seeing an empty fridge)

- Health reminders (posture alerts, break suggestions)
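One way to picture activity-aware behavior is as a policy lookup keyed on whatever activity the glasses have detected. The sketch below is hypothetical: the activity labels, the per-activity allow-lists and the 1.5× text scale are illustrative assumptions rather than any vendor’s rules, and the activity classifier itself is assumed to exist upstream.

```python
from dataclasses import dataclass
from enum import Enum
from typing import Optional


class Activity(Enum):
    DRIVING = "driving"
    MEETING = "meeting"
    EXERCISING = "exercising"
    COOKING = "cooking"
    IDLE = "idle"


@dataclass(frozen=True)
class Notification:
    source: str   # e.g. "navigation", "messages", "recipe"
    urgent: bool


# Hypothetical policy: which notification sources each activity lets through.
# None means "no filtering".
ALLOWED_SOURCES: dict[Activity, Optional[set[str]]] = {
    Activity.DRIVING: {"navigation"},
    Activity.MEETING: {"calendar"},
    Activity.EXERCISING: {"fitness", "messages"},
    Activity.COOKING: {"recipe", "timer"},
    Activity.IDLE: None,
}

# Enlarged text while exercising; the 1.5x factor is an assumption.
TEXT_SCALE = {Activity.EXERCISING: 1.5}


def should_show(note: Notification, activity: Activity) -> bool:
    """Urgent alerts always pass; everything else must match the activity's allow-list."""
    if note.urgent:
        return True
    allowed = ALLOWED_SOURCES[activity]
    return allowed is None or note.source in allowed


def text_scale(activity: Activity) -> float:
    """Display text multiplier for the current activity (1.0 = normal)."""
    return TEXT_SCALE.get(activity, 1.0)


if __name__ == "__main__":
    print(should_show(Notification("messages", urgent=False), Activity.DRIVING))
    print(should_show(Notification("navigation", urgent=False), Activity.DRIVING))
```

Keeping the rules in a table rather than in branching code makes them easy to audit and to expose as user settings, which matters for safety features like driving mode where behavior should be predictable.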

Example Use Case: You’re wearing AI smart glasses and walking toward a coffee shop at 8:15 AM (your usual time). Before you ask, the AI displays: “Your usual order: Grande Latte. Want me to place it ahead? Estimated 3-minute wait.”

4. Multimodal AI Integration

What it does: Smart glasses with AI combine visual, audio, and sensor data to build a comprehensive understanding of each situation.

Key capabilities:

Visual + Voice Queries

- “What’s wrong with this?” while looking at a broken appliance

- “How much is this?” while pointing at a product

- “Who is this person?” when meeting someone

- “How do I use this?” while holding an unfamiliar tool

Sensor Fusion

- GPS + visual landmarks for accurate navigation

- Accelerometer + computer vision for fall detection

- Microphone + lip reading (of the person you’re facing) for better speech recognition in noise

- Heart rate + activity context for health insights
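Sensor fusion often comes down to weighting each source by how much you trust it. The sketch below shows inverse-variance weighting of two independent position estimates, for example a GPS fix and a position inferred from a recognized landmark. It is a simplified, single-step stand-in for the Kalman filters that navigation stacks typically use, and the error figures in the example are assumptions.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Estimate:
    x: float      # east offset from a local origin, metres
    y: float      # north offset from a local origin, metres
    sigma: float  # 1-sigma horizontal error, metres (assumed known per source)


def fuse(a: Estimate, b: Estimate) -> Estimate:
    """Combine two independent estimates by inverse-variance weighting."""
    wa = 1.0 / a.sigma ** 2
    wb = 1.0 / b.sigma ** 2
    x = (wa * a.x + wb * b.x) / (wa + wb)
    y = (wa * a.y + wb * b.y) / (wa + wb)
    # The fused variance is 1 / (wa + wb), smaller than either input's variance.
    return Estimate(x, y, (1.0 / (wa + wb)) ** 0.5)


if __name__ == "__main__":
    gps = Estimate(0.0, 0.0, sigma=8.0)        # assumed urban GPS error
    landmark = Estimate(4.0, 2.0, sigma=2.0)   # assumed visual-landmark error
    fused = fuse(gps, landmark)
    print(f"x={fused.x:.2f} m, y={fused.y:.2f} m, sigma={fused.sigma:.2f} m")
```

With these assumed inputs it prints `x=3.76 m, y=1.88 m, sigma=1.94 m`: the fused fix sits close to the more precise landmark estimate, and its uncertainty is lower than either source alone.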

Cross-Device Intelligence

- Sync with phone to understand full context (recent calls, messages, searches)

- Control smart home based on visual cues (dim lights when watching TV)

- Share visual context with car navigation (identify parking spots)

- Collaborate with other AI devices (pass tasks to smart speakers)

Example Use Case: You’re assembling IKEA furniture and struggling with a step. You look at the pieces and say “I don’t understand this part.” Your AI-powered glasses analyze the parts visually, reference the instruction manual, and display 3D animated assembly guidance overlaid on the actual pieces.

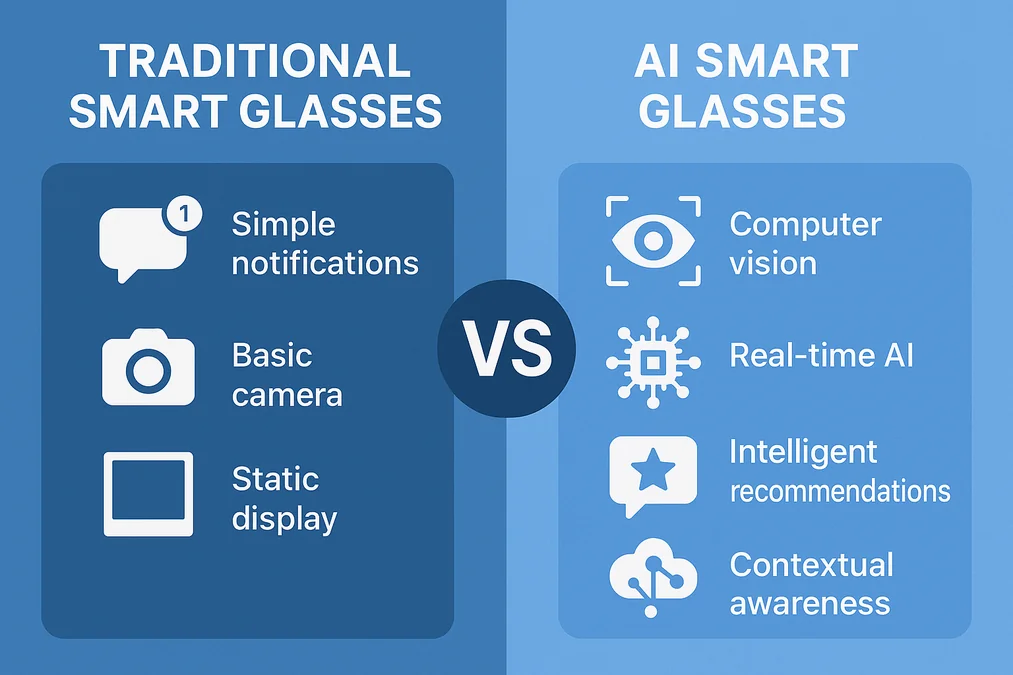

AI Smart Glasses vs Traditional Smart Glasses: Key Differences

Understand the difference between AR smart glasses technology and AI-powered models.

Core Differences Explained

| Feature | Traditional Smart Glasses | AI Smart Glasses | Advantage |
|---|---|---|---|
| Information Display | Static overlays, notifications | Dynamic, context-aware content | AI adapts to needs |
| Interaction | Manual commands (“show me…”) | Proactive assistance (anticipates needs) | Reduces cognitive load |
| Visual Understanding | Camera records video/photos | Computer vision analyzes scenes in real-time | Intelligent interpretation |
| Voice Control | Basic commands (“call John”) | Natural conversation with AI assistant | More intuitive |
| Translation | Manual app activation | Automatic detection + real-time subtitle overlay | Seamless multilingual |
| Navigation | Follow GPS arrows | Understand visual landmarks + provide context | Smarter routing |
| Learning Ability | Fixed functionality | Improves with use (personalization) | Gets better over time |
| Search | Type or speak query | Visual search (“What’s this I’m looking at?”) | Hands-free discovery |
| Decision Support | Present information | Analyze options + recommend best choice | Intelligent guidance |

Real-World Comparison Example

Scenario: You’re shopping for a laptop at an electronics store.

Traditional Smart Glasses Experience:

- Look at laptop → manually take photo

- Say “Search for Dell XPS 13 reviews”

- Read results on small display

- Manually compare prices on different websites

- Make decision based on limited information

AI Smart Glasses Experience:

- Look at laptop → AI automatically identifies “Dell XPS 13 (2024)”

- Instantly displays:

  - Expert review scores (8.4/10 average)

  - Price comparison (this store vs. 5 competitors)

  - “Better deal online: $150 cheaper on Amazon, 2-day shipping”

  - Technical specs vs. your requirements (from previous conversations)

  - AI recommendation: “Good match for your needs, but consider XPS 15 for video editing”

- Ask follow-up questions naturally: “What about battery life?”

- AI provides answer with visual comparison chart

- Decision made in 30 seconds vs. 10+ minutes

Key Insight: Traditional smart glasses require you to think about how to get information. AI smart glasses think about what information you need.

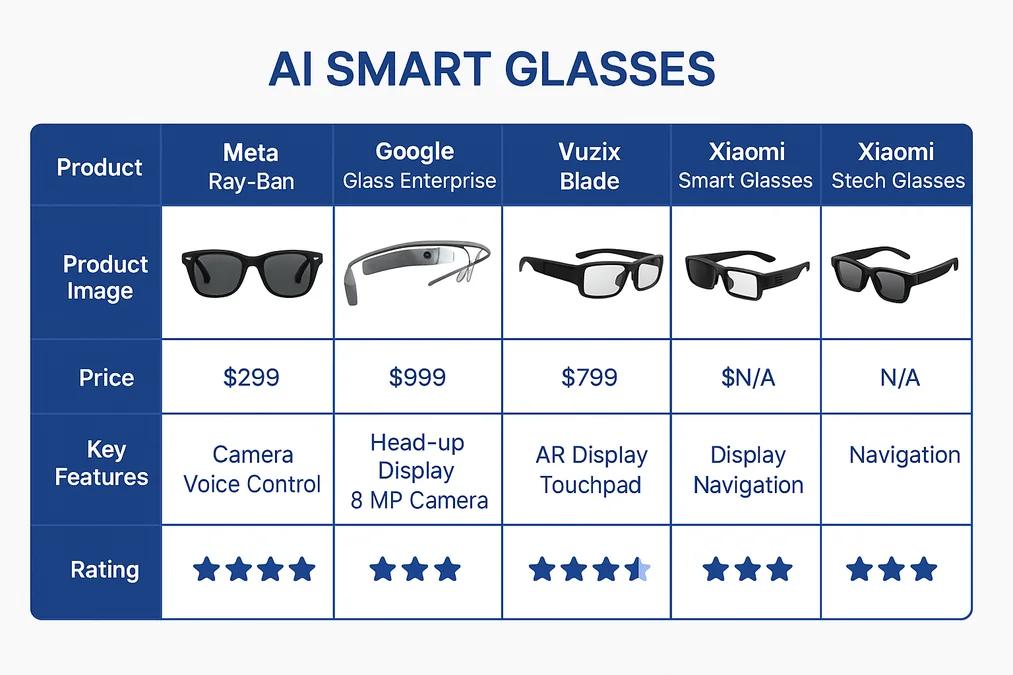

Best AI Smart Glasses 2025: Meta Ray-Ban vs Google Glass vs Vuzix

See how these models rank in our best smart glasses 2025 comparison.

Meta Ray-Ban Smart Glasses with AI

Price: $299 (Wayfarer style) | $329 (Aviator style)

AI Model: Meta AI (Llama 3-powered) + visual intelligence

Best For: Daily wear, social media creators, casual AI assistance

AI Features:

- ✅ Visual search: “Hey Meta, what am I looking at?” identifies objects, landmarks, products

- ✅ Real-time translation: Translate text in field of view (40+ languages)

- ✅ Conversational AI: Multi-turn dialogue with Meta AI assistant

- ✅ Voice control: Hands-free photo/video capture with AI editing

- ✅ Live streaming: Share POV with AI-generated captions

- ✅ Object recognition: Food items, animals, plants, products, logos

Limitations:

- ❌ No AR display (audio and voice-only AI interaction, plus camera)

- ❌ 4-hour battery with AI features active

- ❌ Requires smartphone connection (no standalone mode)

- ❌ Limited enterprise features

User Testimonial: “I use the visual search feature constantly—shopping, museums, hiking. The AI explains everything I look at without disrupting conversation.” (Sarah M., Tech Reviewer)

Product rating: 4.5/5 stars across 12,000+ reviews

Best Use Cases:

- Travel (identify landmarks, translate signs, navigation assistance)

- Shopping (price comparison, product research, style recommendations)

- Social content creation (AI-powered captions, editing suggestions)

- Learning (identify plants, animals, historical sites)

ROI: At $299, these are the most affordable AI-powered glasses with mainstream appeal. Ideal for consumers exploring AI wearables without enterprise pricing.

Google Glass Enterprise Edition 2 + AI

Price: $999 (device) + $50/month AI platform subscription

AI Model: Gemini Pro + Google Cloud Vision AI

Best For: Industrial work, field service, healthcare, warehouses

Explore specific smart glasses for manufacturing applications.

AI Features:

- ✅ Computer vision for quality control: Detect defects in manufacturing (98% accuracy)

- ✅ Predictive maintenance: AI analyzes equipment visually, predicts failures

- ✅ Workflow optimization: AI suggests more efficient task sequences

- ✅ Voice-activated documentation: Speak reports, AI formats and files them

- ✅ Expert guidance: Remote specialists see your POV, AI highlights their instructions

- ✅ Safety alerts: Detect hazards (hot surfaces, moving machinery, PPE compliance)

Standout Features:

- Hands-free inspection: AI automates 70% of quality checks (Boeing case study)

- Medical assistance: HIPAA-compliant AI for patient record access, drug interaction warnings

- Real-time data overlay: AI pulls relevant information from ERP systems based on visual context

- Offline AI: Essential computer vision functions work without internet

Enterprise ROI Example: Boeing deployed 1,200 smart glasses running AI-powered quality inspection. Results:

- Inspection time: -50% (10 min → 5 min per aircraft section)

- Defect detection rate: +32% (AI catches issues humans miss)

- Training time for new inspectors: -60% (AI provides real-time guidance)

- Annual savings: $4.8M across the 787 production line

Best Use Cases:

- Manufacturing quality control

- Field service diagnostics (HVAC, telecom, utilities)

- Healthcare (hands-free EMR access, medication verification)

- Warehouse operations (AI-guided picking, inventory counting)

Vuzix Blade 2 with AI Platform

Price: $1,299 (standard) | $1,499 (enterprise + AI bundle)

AI Model: Multi-platform (Amazon Alexa, custom AI integration via SDK)

Best For: Office workers, healthcare, logistics, field sales

AI Features:

- ✅ Visual workflow guidance: AI displays step-by-step instructions based on what you see

- ✅ Document scanning: AI extracts data from forms, invoices, IDs

- ✅ Facial recognition: Remember names in meetings (enterprise privacy compliant)

- ✅ Voice-to-text: AI transcribes conversations with speaker identification

- ✅ Vision picking: AI-guided warehouse order fulfillment (15% faster than RF guns)

Unique Features:

- Prescription lens compatible: AI adjusts display focus based on Rx

- Lightweight design: 85g (comfortable enough for 8+ hour shifts)

- Multi-AI support: Switch between Alexa, Google Assistant, or custom enterprise AI

- Developer-friendly: Open SDK for custom AI applications

Healthcare Case Study: Kaiser Permanente pilot (500 nurses)

- Medication verification: AI scans drug labels, checks against patient records (99.97% accuracy)

- Patient identification: Facial recognition + wristband scan (eliminates wrong-patient errors)

- Hands-free charting: Voice-to-text AI reduces documentation time by 45%

- Result: Nurses spend 2.3 more hours per shift on patient care instead of paperwork

Best Use Cases:

- Healthcare (medication admin, patient monitoring)

- Warehousing (vision picking, inventory management)

- Field sales (CRM data display, product demos)

- Office work (meeting transcription, calendar management)

AI-Powered Smart Glasses in Work Scenarios

Explore deployment strategies in our guide to smart glasses for work environments.

1. Visual Quality Inspection (Manufacturing)

The Problem: Human inspectors miss 5-8% of defects due to fatigue, complexity, and time pressure.

AI Solution:

- Computer vision analysis: AI scans parts in real-time, compares to CAD models

- Defect highlighting: AR overlay circles cracks, scratches, misalignments

- Severity assessment: AI categorizes defects (cosmetic vs. critical)

- Automated reporting: Voice command “Log defect” captures photo, location, timestamp

Real Data: Siemens Energy uses AI smart glasses for turbine blade inspection:

- Inspection time: 45 min → 18 min per blade (-60%)

- Defect detection: +41% (AI catches micro-cracks invisible to naked eye)

- False positive rate: -73% (AI reduces unnecessary rework)

- Inspector training: 6 weeks → 2 weeks (AI provides real-time guidance)

2. Remote Expert Assistance with AI Enhancement

The Problem: Technicians in the field lack expertise for complex repairs, waiting hours for remote expert availability.

AI Solution:

- Visual problem diagnosis: AI analyzes equipment, suggests likely causes

- Pre-expert triage: AI walks technician through basic troubleshooting before calling expert

- Smart annotations: When expert joins, AI highlights what they’re pointing at

- Knowledge capture: AI converts expert guidance into future training materials

Real Data: PG&E (utility company) AI-powered glasses deployment:

- Expert calls: -55% (AI resolves simple issues without human expert)

- Average repair time: 120 min → 68 min

- First-time fix rate: 67% → 89%

- Expert productivity: Each expert now supports 3× more technicians (AI handles initial triage)
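The percentages in these case studies are relative changes computed from before/after values. A tiny helper makes that arithmetic explicit and is a quick way to sanity-check such figures; it uses only the numbers quoted for Siemens Energy and PG&E above.

```python
def pct_change(before: float, after: float) -> float:
    """Relative change from `before` to `after`, in percent (negative = reduction)."""
    if before == 0:
        raise ValueError("baseline must be non-zero")
    return (after - before) / before * 100.0


# Before/after values quoted in the Siemens Energy and PG&E results.
CASES = {
    "Siemens Energy inspection time per blade (min)": (45, 18),
    "PG&E average repair time (min)": (120, 68),
    "PG&E first-time fix rate (%)": (67, 89),
}

if __name__ == "__main__":
    for name, (before, after) in CASES.items():
        print(f"{name}: {pct_change(before, after):+.1f}%")
```

The Siemens figure checks out (45 → 18 min is -60.0%), and the PG&E repair time works out to about -43.3%. For rates such as first-time fix, it helps to distinguish the relative change (+32.8%) from the absolute gain of 22 percentage points.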

3. Medical Diagnosis Support

The Problem: Doctors need hands-free access to patient data, drug interactions, and diagnostic support during procedures.

AI Solution:

- Patient data overlay: AI displays vitals, allergies, medication list in field of view

- Drug interaction warnings: AI alerts if prescribing conflicting medications

- Diagnostic suggestions: AI analyzes symptoms, suggests differential diagnoses

- Procedure guidance: AI displays step-by-step instructions for rare procedures

Real Data: Cleveland Clinic surgical team pilot (85 surgeons):

- Surgical prep time: -22% (AI provides checklist confirmation)

- Medication errors: -67% (AI catches drug interactions)

- Rare procedure confidence: +48% (AI provides real-time guidance)

- Documentation time: -50% (voice dictation with AI formatting)

Privacy Note: Medical AI smart glasses that handle patient data must comply with HIPAA, including safeguards such as encryption and audit logging.

4. Multilingual Customer Service

The Problem: Language barriers reduce service quality in international settings (hotels, airports, retail).

AI Solution:

- Real-time translation: Customer speaks Spanish, employee sees English subtitles (and vice versa)

- Cultural context: AI suggests culturally appropriate responses

- Product information: AI translates product details, menus, signage instantly

- Accent assistance: AI improves speech recognition for non-native speakers

Real Data: Hilton Hotels pilot (12 properties, 450 staff):

- Guest satisfaction: +18% (measured via post-stay surveys)

- Service speed: +12% (no waiting for bilingual staff)

- Upsell conversion: +23% (staff can explain premium services in guest’s language)

- Staff confidence: 89% report feeling more comfortable serving international guests

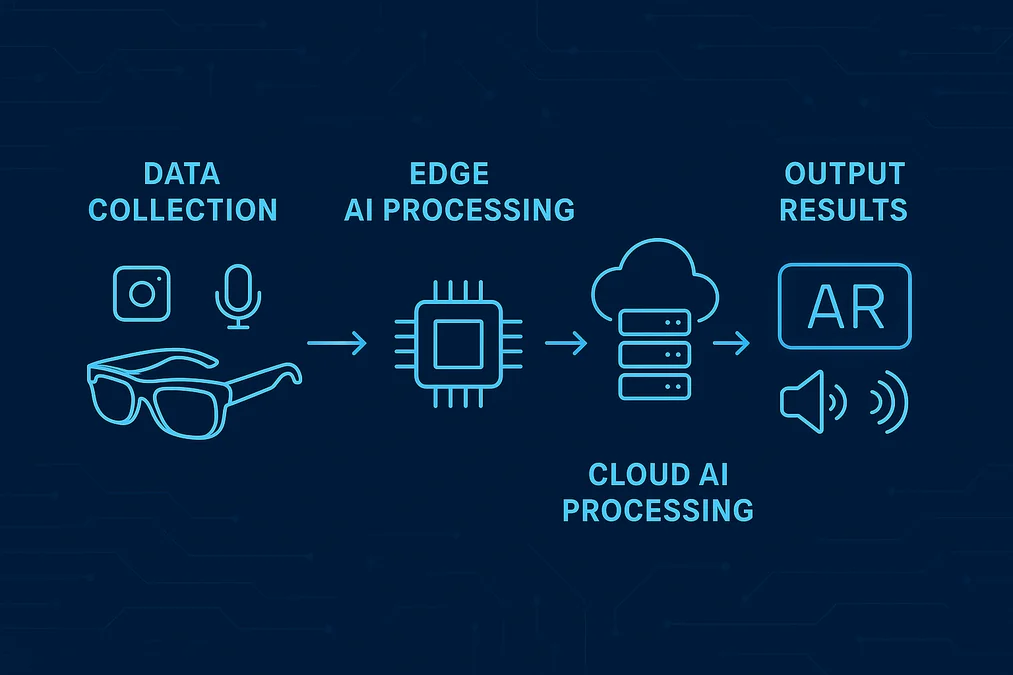

How AI-Powered Smart Glasses Work: Technology & Processing Pipeline

The AI Processing Pipeline (Simplified)

Step 1: Data Capture

- Cameras: 2-4 cameras (RGB + depth sensing) capture 60+ frames per second

- Microphones: 4-mic array with noise cancellation for voice input

- Sensors: Accelerometer, gyroscope, magnetometer for orientation

Step 2: Edge AI Processing (On-Device)

- Lightweight AI models: Optimized neural networks run on Arm-based processors such as Qualcomm Snapdragon chips

- Computer vision: Object detection, text recognition (OCR), face detection

- Voice processing: Wake word detection, basic commands

- Privacy-first: Sensitive data (faces, locations) processed locally, not sent to cloud

Step 3: Cloud AI Processing (When Needed)

- Complex queries: Natural language understanding, conversational AI (GPT-4, Gemini)

- Large models: Visual search against billions of images

- Real-time translation: Neural machine translation for 40+ languages

- Data synthesis: Combine visual + voice + context for comprehensive answers

Step 4: Output

- Visual: AR overlays, text, icons, 3D models (if equipped with display)

- Audio: Bone conduction or open-ear speakers for voice responses

- Haptic: Vibration feedback for notifications

Latency: Edge AI responses: <100ms | Cloud AI responses: 500-2000ms (depending on network)
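The split between Step 2 and Step 3 amounts to a routing decision for each task. The sketch below encodes one plausible policy under stated assumptions: each task is tagged (hypothetically) as having an on-device model or not and as touching sensitive data or not. Anything with an on-device model stays local, sensitive data never goes to the cloud, and cloud-only tasks fail fast when offline. It is an illustration, not any manufacturer’s actual scheduler.

```python
from dataclasses import dataclass
from enum import Enum, auto


class Tier(Enum):
    EDGE = auto()   # on-device: roughly <100 ms per the latency figures above
    CLOUD = auto()  # remote: roughly 500-2000 ms depending on the network


@dataclass(frozen=True)
class Task:
    name: str
    has_edge_model: bool  # a lightweight on-device model exists for this task
    sensitive: bool       # touches faces, precise location, or similar data


def route(task: Task, online: bool) -> Tier:
    """Choose where a task runs under an edge-first, privacy-first policy."""
    if task.has_edge_model:
        return Tier.EDGE  # faster, works offline, and keeps raw data local
    if task.sensitive:
        # No local model, but sensitive data must not leave the device.
        raise PermissionError(f"{task.name}: sensitive task has no on-device model")
    if not online:
        raise ConnectionError(f"{task.name}: needs cloud AI but the device is offline")
    return Tier.CLOUD


if __name__ == "__main__":
    for task in [
        Task("wake word detection", has_edge_model=True, sensitive=False),
        Task("face detection", has_edge_model=True, sensitive=True),
        Task("conversational query", has_edge_model=False, sensitive=False),
    ]:
        print(task.name, "->", route(task, online=True).name)
```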

Privacy & Security in AI Smart Glasses

Understand required smart glasses safety standards and privacy regulations.

Key Concerns:

- 🔴 Facial recognition without consent

- 🔴 Recording in private spaces

- 🔴 Data leaks (visual information is sensitive)

- 🔴 AI bias (facial recognition accuracy varies by demographics)

Industry Solutions:

- ✅ Visual indicators: LED lights when camera active

- ✅ Data minimization: Only send necessary data to cloud (e.g., text from OCR, not full images)

- ✅ User consent: Require permission before recording others

- ✅ Encryption: End-to-end encryption for all transmitted data

- ✅ Local processing: Process sensitive data (faces, locations) on-device only

- ✅ Audit logs: Enterprise versions log all data access for compliance

- ✅ Opt-in facial recognition: Many models disable facial recognition by default
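Data minimization can be made concrete by looking at what actually goes into a cloud request. In the sketch below, only the text recognized on-device is uploaded; the camera frame and precise location are dropped, and e-mail addresses are masked first. The `Capture` type, the `cloud_payload` function and the JSON fields are hypothetical, not a real service’s API, and the e-mail regex is deliberately simplistic.

```python
import json
import re
from dataclasses import dataclass

# Deliberately simple e-mail pattern; real PII detection needs far more than this.
EMAIL = re.compile(r"[\w.+-]+@[\w-]+\.[\w.]+")


@dataclass
class Capture:
    image_jpeg: bytes   # raw camera frame: never leaves the device in this sketch
    ocr_text: str       # text already extracted by an on-device OCR model (assumed)
    latitude: float     # precise location: also kept on the device
    longitude: float


def cloud_payload(capture: Capture, target_lang: str) -> str:
    """Build the JSON body for a hypothetical cloud translation request.

    Only the recognized text is included; the image and location are dropped,
    and e-mail addresses in the text are masked before upload.
    """
    text = EMAIL.sub("[email]", capture.ocr_text)
    return json.dumps({"text": text, "target_lang": target_lang})


if __name__ == "__main__":
    frame = Capture(b"\xff\xd8 placeholder jpeg bytes", "Contact: info@example.com", 48.8566, 2.3522)
    print(cloud_payload(frame, "en"))
```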

Regulatory Compliance:

- GDPR (Europe): Rights to access and delete personal data captured by AI glasses

- CCPA (California): Disclosure requirements for data collection

- HIPAA (Healthcare): Strict encryption + audit requirements for medical AI glasses

- BIPA (Illinois): Biometric data (facial recognition) requires explicit consent

Future of AI Smart Glasses: 2025-2030 Trends

1. Multimodal Foundation Models (2025-2026)

What’s Coming: GPT-5, Gemini Ultra, Claude 4—AI models that natively understand images, video, audio simultaneously.

Impact on AI Smart Glasses:

- True contextual intelligence: AI understands “What should I do about this?” while looking at a leaking pipe (visual context + world knowledge + action planning)

- Zero-shot learning: AI handles novel situations without specific training

- Reasoning abilities: AI explains its recommendations (“I suggest the blue shirt because you have a client meeting at 2 PM and wore the red one yesterday”)

2. On-Device Large Language Models (2026-2027)

What’s Coming: Compressed AI models small enough to run entirely on the glasses’ own AI processors.

Impact:

- Privacy: Sensitive queries never leave device

- Speed: <50ms response time (vs. 500-2000ms cloud latency)

- Offline capability: Full AI assistant works without internet

- Cost: No cloud API fees (reduce operating cost by 80%)

Technical Challenge: Today’s largest LLMs require 50GB+ of storage. Target: 2GB models with 80% capability retention.
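A back-of-the-envelope calculation shows where these storage figures come from: weight storage is roughly the parameter count times the bits per weight, divided by eight. The parameter counts and precisions below are illustrative assumptions, and the estimate ignores runtime memory for activations and caches.

```python
def weights_gb(params: float, bits_per_weight: float) -> float:
    """Approximate storage for model weights in gigabytes (1 GB = 1e9 bytes)."""
    return params * bits_per_weight / 8 / 1e9


if __name__ == "__main__":
    examples = [
        ("70B params, 16-bit", 70e9, 16),
        ("7B params, 4-bit", 7e9, 4),
        ("3B params, 4-bit", 3e9, 4),
    ]
    for label, params, bits in examples:
        print(f"{label}: {weights_gb(params, bits):.1f} GB")
```

A 70B-parameter model at 16-bit precision needs about 140 GB, while a 3B model quantized to 4 bits fits in about 1.5 GB, inside the 2GB target. Whether such a small model retains 80% of a large model’s capability is a separate question the arithmetic does not answer.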

3. Persistent Spatial Memory (2027-2028)

What’s Coming: AI-powered glasses remember physical spaces and objects over time.

Examples:

- Leave keys on table → Days later, ask “Where are my keys?” → AI remembers and guides you

- Read book → Weeks later, ask “What was that book about?” → AI recalls the cover it saw

- Meet person → Next encounter, AI whispers name reminder before you embarrass yourself

Privacy Control: Users control what AI remembers, with automatic expiration settings.
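At its core, the keys example is a key-value store with timestamps and an expiry policy. The sketch below assumes an upstream vision model already turns sightings into (object label, place) pairs, which is the hard part. The class and method names and the 7-day retention value are hypothetical, chosen to illustrate the automatic-expiration and user-deletion controls described above.

```python
import time
from dataclasses import dataclass, field
from typing import Optional


@dataclass
class Sighting:
    place: str      # human-readable location, e.g. "hallway table"
    seen_at: float  # Unix timestamp of the sighting


@dataclass
class ObjectMemory:
    """Remembers where each object was last seen; entries expire after ttl_s seconds."""
    ttl_s: float
    _last: dict[str, Sighting] = field(default_factory=dict, init=False)

    def record(self, label: str, place: str, now: Optional[float] = None) -> None:
        # Only the most recent sighting per object is kept.
        self._last[label] = Sighting(place, time.time() if now is None else now)

    def where_is(self, label: str, now: Optional[float] = None) -> Optional[str]:
        self._expire(time.time() if now is None else now)
        sighting = self._last.get(label)
        return sighting.place if sighting else None

    def forget(self, label: str) -> None:
        # User-initiated deletion, independent of the expiry timer.
        self._last.pop(label, None)

    def _expire(self, now: float) -> None:
        for label in [k for k, s in self._last.items() if now - s.seen_at > self.ttl_s]:
            del self._last[label]


if __name__ == "__main__":
    DAY = 86_400
    memory = ObjectMemory(ttl_s=7 * DAY)          # assumed 7-day retention setting
    memory.record("keys", "hallway table", now=0)
    print(memory.where_is("keys", now=2 * DAY))   # hallway table
    print(memory.where_is("keys", now=8 * DAY))   # None (expired)
```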

4. Emotional Intelligence AI (2028-2030)

What’s Coming: AI in glasses that detects and responds appropriately to human emotions.

Applications:

- Accessibility: Help individuals with autism interpret social cues

- Mental health: Detect signs of stress, suggest breaks or breathing exercises

- Sales: Guide conversations based on customer emotional state (interest vs. skepticism)

- Meetings: Provide real-time feedback on your presentation impact

Ethical Concerns: This capability raises significant privacy and manipulation concerns—expect heavy regulation.

5. Brain-Computer Interface Integration (2030+)

What’s Coming: Neural interfaces (like Neuralink) combined with AI smart glasses.

Potential:

- Thought-to-text: Think a message, AI glasses send it

- Visual memory recording: AI saves what you see, searchable by thought later

- Augmented cognition: AI assists with complex mental tasks (math, memory recall)

Timeline: Research stage—commercial availability 2030+.

Frequently Asked Questions About AI Smart Glasses

What can AI smart glasses do that smartphones can’t?

AI smart glasses provide hands-free, context-aware assistance while keeping your attention on the real world. Unlike smartphones, smart glasses with AI:

- Don’t require you to look down: Information appears in your field of view

- Understand what you’re looking at: Visual context eliminates the need to describe or photograph

- Work while hands are occupied: Cooking, exercising, working with tools

- Provide socially appropriate interaction: No antisocial phone staring in conversations

- Offer spatial computing: AR overlays interact with physical objects (not possible on flat screens)

Example: A smartphone requires you to stop walking, take out your phone, open an app, type or speak a query, and read the results. AI smart glasses let you ask “What’s this building?” while walking, get an instant answer via audio, and keep walking.

Are AI smart glasses safe for privacy?

Safety depends on manufacturer, features, and user behavior:

Built-in Privacy Features:

- ✅ Visual recording indicators (LED lights)

- ✅ Local processing for sensitive data (faces, locations)

- ✅ Encryption for cloud communication

- ✅ User-controlled data deletion

Risks to Be Aware Of:

- ⚠️ Facial recognition without consent (many models disable it by default)

- ⚠️ Recording in private spaces (bathrooms, locker rooms—illegal in most jurisdictions)

- ⚠️ Third-party app data collection (read privacy policies)

- ⚠️ Hacking vulnerabilities (keep firmware updated)

Best Practices:

- Choose models with strong privacy features (local processing, minimal cloud data)

- Keep facial recognition disabled unless you need it (check the default settings)

- Respect others: Announce when recording in shared spaces

- Review and delete captured data regularly

Legal Note: Some jurisdictions (e.g., Illinois, parts of Europe) require explicit consent before recording others’ biometric data (faces, voices).

How long does the battery last on AI smart glasses?

Typical battery life (AI features active):

| Model | Battery Life | Usage Pattern |
|---|---|---|
| Meta Ray-Ban AI | 4-6 hours | Moderate AI usage (visual search, translation every 10-15 min) |
| Google Glass Enterprise 2 | 8-10 hours | Industrial use (continuous AI workflow guidance) |
| Vuzix Blade 2 | 6-8 hours | Office/warehouse (intermittent AI queries) |
| Xiaomi Smart Glasses | 5-7 hours | Consumer use (smart home, navigation, calls) |

Learn more about smart glasses battery life optimization.

Battery drain factors:

- 🔴 High drain: Continuous computer vision, live video streaming, AR overlays

- 🟡 Moderate drain: Frequent voice queries, real-time translation, navigation

- 🟢 Low drain: Passive mode (notifications only, AI on-demand)

Power-saving tips:

- Disable continuous computer vision (activate only when needed)

- Use edge AI features (on-device processing) vs. cloud AI when possible

- Lower display brightness (if AR display model)

- Turn off unnecessary sensors (GPS when indoors)
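The effect of these tips can be estimated by treating battery life as capacity divided by average power draw, where the average is weighted by how much time is spent in each mode. Every number below (battery capacity and per-mode power draw) is a made-up illustration, not a measurement of any real product.

```python
def runtime_hours(capacity_mwh: float, duty_cycle: dict[str, float],
                  power_mw: dict[str, float]) -> float:
    """Estimate battery life from a usage mix.

    duty_cycle: fraction of time spent in each mode (fractions must sum to 1).
    power_mw:   average power draw of each mode, in milliwatts.
    """
    if abs(sum(duty_cycle.values()) - 1.0) > 1e-6:
        raise ValueError("duty-cycle fractions must sum to 1")
    avg_mw = sum(frac * power_mw[mode] for mode, frac in duty_cycle.items())
    return capacity_mwh / avg_mw


# Illustrative numbers only; not measurements of any real product.
POWER_MW = {"passive": 50, "voice": 150, "vision": 400}
CAPACITY_MWH = 600

if __name__ == "__main__":
    on_demand = {"passive": 0.80, "voice": 0.15, "vision": 0.05}
    always_on = {"passive": 0.20, "voice": 0.10, "vision": 0.70}
    print(f"on-demand vision: {runtime_hours(CAPACITY_MWH, on_demand, POWER_MW):.1f} h")
    print(f"continuous vision: {runtime_hours(CAPACITY_MWH, always_on, POWER_MW):.1f} h")
```

With these made-up figures, on-demand vision gives about 7.3 hours versus about 2.0 hours with vision running continuously, which is why disabling continuous computer vision is the first tip.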

Future outlook: 2026 models promise 12+ hours of battery life with more powerful AI, thanks to improved processor efficiency.

Can AI smart glasses work offline?

Yes, partially—depends on the AI feature:

Works Offline (Edge AI on device):

- ✅ Basic object recognition (pre-trained categories)

- ✅ Text recognition (OCR) in common languages

- ✅ Voice commands for device control (play music, call contacts)

- ✅ Navigation with downloaded maps

- ✅ Fitness tracking and health monitoring

- ✅ Offline translation (pre-downloaded language packs, limited to common phrases)

Requires Internet (Cloud AI):

- ❌ Visual search (identify unknown objects, landmarks)

- ❌ Conversational AI (GPT-4, Gemini—complex natural language)

- ❌ Real-time translation (full neural machine translation)

- ❌ Live information (weather, news, stock prices)

- ❌ Social media features (posting, live streaming)

Enterprise Models: Google Glass Enterprise 2 offers more offline capabilities for industrial use (quality inspection and workflow guidance work without internet).

Future improvement: On-device large language models (2026+) will enable most AI features offline.

What’s the difference between AR glasses and AI smart glasses?

Many people confuse these terms—here’s the distinction:

AR Glasses (Augmented Reality):

- Focus: Visual display technology

- Core feature: Overlay digital content on real world (images, 3D objects, text)

- Intelligence: May or may not have AI (early AR glasses were “dumb displays”)

- Examples: Microsoft HoloLens (AR display, limited AI), Magic Leap (AR-focused)

AI Smart Glasses (Artificial Intelligence):

- Focus: Intelligent assistance

- Core feature: Understand context, provide recommendations, anticipate needs

- Display: May or may not have AR display (Meta Ray-Ban has AI but no display)

- Examples: Meta Ray-Ban (AI, no display), Google Glass (AI + display)

The Overlap: AI-powered AR glasses combine both:

- AR display for visual overlays

- AI for understanding what to display and when

- Example: You look at a machine → AI recognizes it’s broken → AR overlay shows repair instructions

In practice: Most modern “smart glasses” have some AI, but not all have AR displays. The trend is converging toward AI-powered AR glasses that do both.

How much do AI smart glasses cost?

2025 pricing by category:

| Category | Price Range | Examples | Best For |
|---|---|---|---|
| Consumer AI | $299-$499 | Meta Ray-Ban, Xiaomi | Daily wear, social, travel |
| Professional | $999-$1,499 | Vuzix Blade 2, Lenovo ThinkReality | Office work, healthcare, light industrial |
| Enterprise AI | $1,500-$3,000 | Google Glass Enterprise 2, RealWear | Manufacturing, field service, hazardous environments |
| Developer Kits | $2,000-$5,000 | Magic Leap 2, Snap Spectacles (AR) | Custom AI app development |

See our complete smart glasses price comparison guide.

Total Cost of Ownership (3-year enterprise):

- Device: $2,000

- AI platform subscription: $600/year × 3 = $1,800

- Software integrations: $2,000 (one-time)

- Training: $500 (one-time)

- Support/maintenance: $300/year × 3 = $900

- Total: $7,200 per device over 3 years

ROI Justification: Enterprises report 12-18 month payback through productivity gains (20-40% time savings in target workflows).
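The TCO total and the payback claim can be checked with a few lines of arithmetic. The cost items below come from the 3-year breakdown above; the monthly savings per device is a hypothetical input (the article does not give one), and the model ignores discounting and price changes.

```python
import math

# Per-device cost items from the 3-year TCO breakdown (USD).
UPFRONT = {"device": 2000, "integrations": 2000, "training": 500}
ANNUAL = {"ai_platform": 600, "support": 300}
YEARS = 3


def three_year_tco() -> int:
    """One-time costs plus three years of recurring costs."""
    return sum(UPFRONT.values()) + YEARS * sum(ANNUAL.values())


def payback_months(monthly_savings: float) -> int:
    """First month in which cumulative net savings cover the one-time costs."""
    monthly_cost = sum(ANNUAL.values()) / 12
    net = monthly_savings - monthly_cost
    if net <= 0:
        raise ValueError("savings never cover the recurring costs")
    return math.ceil(sum(UPFRONT.values()) / net)


if __name__ == "__main__":
    print(f"3-year TCO: ${three_year_tco():,}")
    print(f"Payback at an assumed $400/month savings: {payback_months(400)} months")
```

At an assumed $400 per device per month in savings, payback lands at 14 months, inside the 12-18 month range enterprises report; at $75 per month or less, savings never cover the recurring fees.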

Consumer advice: Start with Meta Ray-Ban ($299) to explore AI features before investing in expensive enterprise models.

Conclusion: Are AI Smart Glasses Ready for You?

AI smart glasses have crossed the threshold from futuristic concept to practical tool in 2025. The question isn’t whether AI-powered eyewear will become mainstream—it’s whether you’re ready to adopt them now or wait for the next generation.

You should consider AI smart glasses now if:

- ✅ You work in hands-free environments (manufacturing, field service, healthcare)

- ✅ You frequently need information while on the move (travel, sales, warehousing)

- ✅ You value multilingual communication (international business, hospitality)

- ✅ You’re an early adopter comfortable with new AI interfaces

- ✅ Your work involves visual tasks that could benefit from AI assistance (inspection, diagnostics, assembly)

You should wait for next-generation models if:

- ⏳ Battery life is critical (current 4-8 hours may be insufficient)

- ⏳ You need all-day AR display (current displays have a limited field of view)

- ⏳ Budget is tight (prices are expected to fall 30-40% by 2027)

- ⏳ You want fully offline AI (on-device LLMs are expected in 2026-2027)

- ⏳ You need specific enterprise integrations not yet available

The bottom line: For consumers, Meta Ray-Ban AI glasses ($299) offer the best value for exploring AI features. For enterprises, Google Glass Enterprise 2 ($999) delivers measurable ROI in hands-free workflows. The technology is mature enough for practical use today, with further improvements on the way.